Exploring Docker cross-host communication

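This is only a narrow glimpse, but it helps in understanding how the Kubernetes network components are actually implemented.

Let's start with a few small experiments to see which building blocks are needed. I have written about these before.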

veth pair

This experiment is simple:

1. Create two netns

[root@emporerlinux ~]# ip netns add ns1

[root@emporerlinux ~]# ip netns add ns2

2. Create a veth pair

[root@emporerlinux ~]# ip link add name veth-ns1 type veth peer name veth-ns2

3. Move each end of the pair into a different netns

NS1

1. Add the veth-ns1 interface to ns1

[root@emporerlinux ~]# ip link set dev veth-ns1 netns ns1

[root@emporerlinux ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: veth-ns1@if3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 76:eb:70:3c:02:87 brd ff:ff:ff:ff:ff:ff link-netnsid 0

2. Bring the interface up

[root@emporerlinux ~]# ip netns exec ns1 ip link set dev veth-ns1 up

[root@emporerlinux ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: veth-ns1@if3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 76:eb:70:3c:02:87 brd ff:ff:ff:ff:ff:ff link-netnsid 0

3. Configure the IP address of the veth-ns1 interface in ns1

[root@emporerlinux ~]# ip netns exec ns1 ip addr add 10.1.2.1/24 dev veth-ns1

[root@emporerlinux ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: veth-ns1@if3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 76:eb:70:3c:02:87 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.2.1/24 scope global veth-ns1

valid_lft forever preferred_lft forever

NS2, likewise:

1. Add the veth-ns2 interface to ns2

[root@emporerlinux ~]# ip link set dev veth-ns2 netns ns2

[root@emporerlinux ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: veth-ns2@if4: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 82:95:9d:6a:59:9b brd ff:ff:ff:ff:ff:ff link-netnsid 0

2. Bring the interface up

[root@emporerlinux ~]# ip netns exec ns2 ip link set dev veth-ns2 up

[root@emporerlinux ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: veth-ns2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 82:95:9d:6a:59:9b brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::8095:9dff:fe6a:599b/64 scope link

valid_lft forever preferred_lft forever

3. Configure the IP address of the veth-ns2 interface in ns2

[root@emporerlinux ~]# ip netns exec ns2 ip a a 10.1.2.2/24 dev veth-ns2

[root@emporerlinux ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: veth-ns2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 82:95:9d:6a:59:9b brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.2.2/24 scope global veth-ns2

valid_lft forever preferred_lft forever

inet6 fe80::8095:9dff:fe6a:599b/64 scope link

valid_lft forever preferred_lft forever

4. Test:

[root@emporerlinux ~]# ip netns exec ns2 ping -c2 10.1.2.1

PING 10.1.2.1 (10.1.2.1) 56(84) bytes of data.

64 bytes from 10.1.2.1: icmp_seq=1 ttl=64 time=0.059 ms

64 bytes from 10.1.2.1: icmp_seq=2 ttl=64 time=0.087 ms

--- 10.1.2.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1054ms

rtt min/avg/max/mdev = 0.059/0.073/0.087/0.014 ms

5. However, you will find that this netns cannot ping its own address, because the lo interface has not been brought up

[root@emporerlinux ~]# ip netns exec ns2 ping -c2 10.1.2.2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

^C

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1045ms

6. Bring up the lo loopback interface

[root@emporerlinux ~]# ip netns exec ns2 ip link set lo up

[root@emporerlinux ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: veth-ns2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 82:95:9d:6a:59:9b brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.2.2/24 scope global veth-ns2

valid_lft forever preferred_lft forever

inet6 fe80::8095:9dff:fe6a:599b/64 scope link

valid_lft forever preferred_lft forever

[root@emporerlinux ~]# ip netns exec ns2 ping -c2 10.1.2.2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

64 bytes from 10.1.2.2: icmp_seq=1 ttl=64 time=0.034 ms

64 bytes from 10.1.2.2: icmp_seq=2 ttl=64 time=0.041 ms

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1036ms

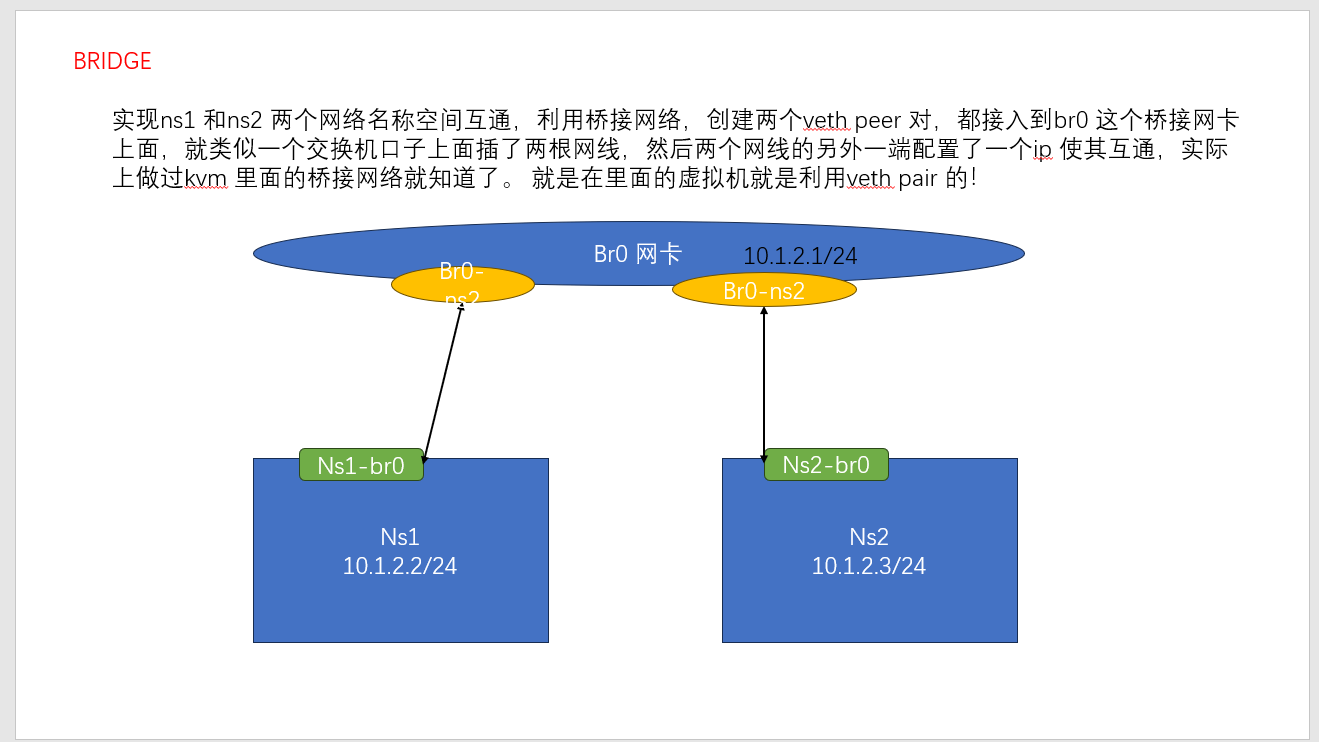

rtt min/avg/max/mdev = 0.034/0.037/0.041/0.007 msBRIDGE

Here are the commands directly; refer to the figure to follow the topology:

[root@emporerlinux ~]# ip netns add ns1

[root@emporerlinux ~]# ip netns add ns2

[root@emporerlinux ~]# ip link add br0 type bridge

[root@emporerlinux ~]# ip link set br0 up

[root@emporerlinux ~]# ip a a 10.1.2.1/24 dev br0

[root@emporerlinux ~]# ip link add ns1-br0 type veth peer name br0-ns1

[root@emporerlinux ~]# ip link add ns2-br0 type veth peer name br0-ns2

[root@emporerlinux ~]# ip link set dev ns1-br0 netns ns1

[root@emporerlinux ~]# ip link set dev ns2-br0 netns ns2

[root@emporerlinux ~]# ip netns exec ns1 ip link set dev ns1-br0 up

[root@emporerlinux ~]# ip netns exec ns2 ip link set dev ns2-br0 up

[root@emporerlinux ~]# ip netns exec ns1 ip add add 10.1.2.2/24 dev ns1-br0

[root@emporerlinux ~]# ip netns exec ns2 ip add add 10.1.2.3/24 dev ns2-br0

[root@emporerlinux ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000000000000 no

[root@emporerlinux ~]# brctl addif br0 br0-ns1

[root@emporerlinux ~]# brctl addif br0 br0-ns2

[root@emporerlinux ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.22b7d7de8484 no br0-ns1

br0-ns2

[root@emporerlinux ~]# ip link set dev br0-ns1 up

[root@emporerlinux ~]# ip link set dev br0-ns2 up

[root@emporerlinux ~]# ip netns exec ns2 ping 10.1.2.1

PING 10.1.2.1 (10.1.2.1) 56(84) bytes of data.

64 bytes from 10.1.2.1: icmp_seq=1 ttl=64 time=0.100 ms

64 bytes from 10.1.2.1: icmp_seq=2 ttl=64 time=0.080 ms

^C

--- 10.1.2.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1045ms

rtt min/avg/max/mdev = 0.080/0.090/0.100/0.010 ms

[root@emporerlinux ~]# ip netns exec ns2 ping 10.1.2.2 -c2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

64 bytes from 10.1.2.2: icmp_seq=1 ttl=64 time=0.094 ms

64 bytes from 10.1.2.2: icmp_seq=2 ttl=64 time=0.139 ms

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.094/0.116/0.139/0.024 ms

[root@emporerlinux ~]# ping 10.1.2.2 -c 2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

64 bytes from 10.1.2.2: icmp_seq=1 ttl=64 time=0.101 ms

64 bytes from 10.1.2.2: icmp_seq=2 ttl=64 time=0.066 ms

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1034ms

rtt min/avg/max/mdev = 0.066/0.083/0.101/0.019 ms

[root@emporerlinux ~]# ping 10.1.2.3 -c 2

PING 10.1.2.3 (10.1.2.3) 56(84) bytes of data.

64 bytes from 10.1.2.3: icmp_seq=1 ttl=64 time=0.077 ms

64 bytes from 10.1.2.3: icmp_seq=2 ttl=64 time=0.086 ms

--- 10.1.2.3 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1033ms

rtt min/avg/max/mdev = 0.077/0.081/0.086/0.010 ms

Everything above covers communication between different netns on a single host.

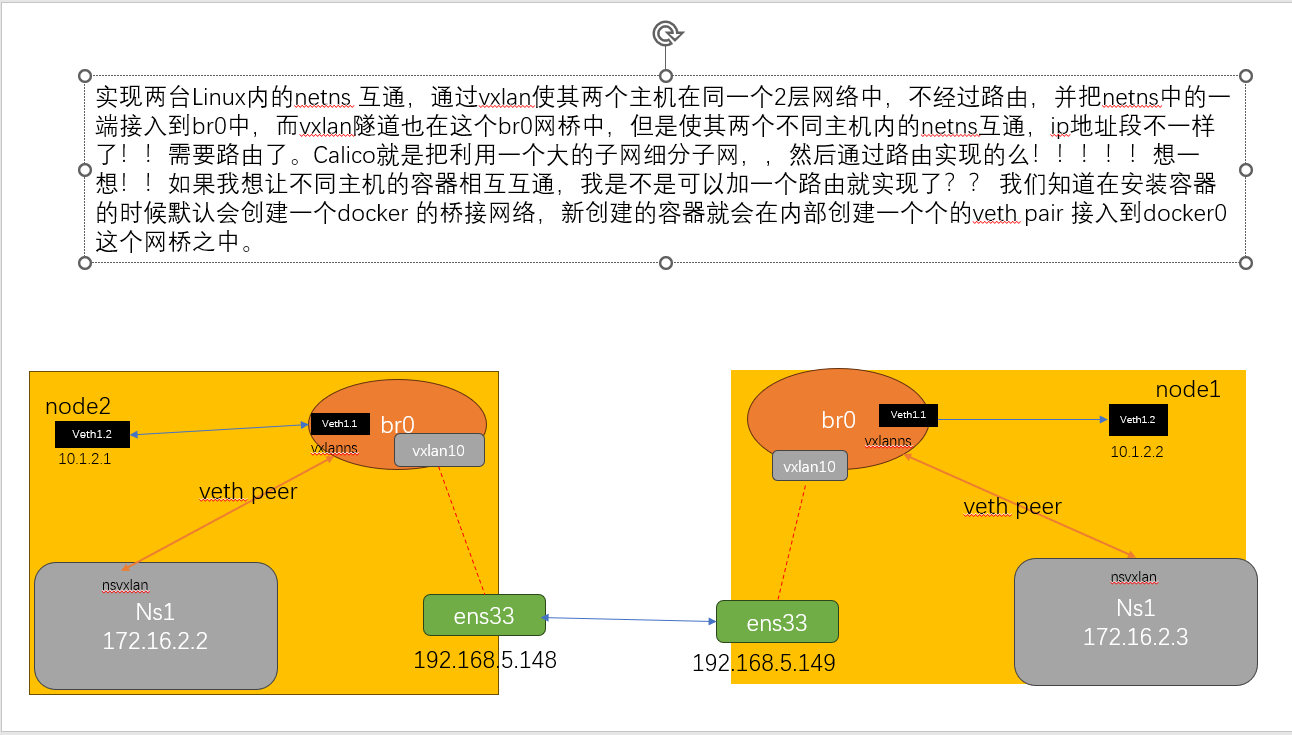
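The bridge experiment above can be condensed into a single script. This is a minimal sketch rather than the exact procedure from the transcript: it uses `ip link set ... master br0` in place of `brctl addif` (the same operation done through iproute2), it assumes ns1, ns2 and br0 do not exist yet, and the `run` helper and `APPLY` variable are hypothetical additions so that the script only prints the commands unless `APPLY=1` is set (executing them requires root).

```shell
#!/bin/sh
# Print each command; execute it only when APPLY=1 (hypothetical switch, needs root).
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = 1 ]; then "$@"; fi
}

setup_bridge_lab() {
    # The bridge itself, with the gateway-style address used in the transcript.
    run ip link add br0 type bridge
    run ip addr add 10.1.2.1/24 dev br0
    run ip link set br0 up

    i=1
    for ns in ns1 ns2; do
        i=$((i + 1))                      # ns1 -> 10.1.2.2, ns2 -> 10.1.2.3
        run ip netns add "$ns"
        run ip link add "${ns}-br0" type veth peer name "br0-${ns}"
        run ip link set "${ns}-br0" netns "$ns"        # one end into the netns
        run ip link set "br0-${ns}" master br0         # other end onto the bridge
        run ip link set "br0-${ns}" up
        run ip netns exec "$ns" ip addr add "10.1.2.$i/24" dev "${ns}-br0"
        run ip netns exec "$ns" ip link set "${ns}-br0" up
    done
}

setup_bridge_lab
```

Run as is, it lists the 17 commands; run with `APPLY=1` as root, it builds the same topology as the figure.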

vxlan

Use a VXLAN tunnel to let two netns communicate.

node1 192.168.5.149

1. Create the bridge br-vxlan

[root@node1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:58:83:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.149/24 brd 192.168.5.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::2942:9c0f:ad64:d61d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node1 ~]# ping 192.168.5.148 -c 1

PING 192.168.5.148 (192.168.5.148) 56(84) bytes of data.

64 bytes from 192.168.5.148: icmp_seq=1 ttl=64 time=0.261 ms

--- 192.168.5.148 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.261/0.261/0.261/0.000 ms

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

[root@node1 ~]# brctl addbr br-vxlan

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.000000000000 no

2. Create the VXLAN tunnel interface and add it to the br-vxlan bridge

[root@node1 ~]# ip link add vxlan100 type vxlan id 100 remote 192.168.5.148 dstport 4789 dev ens33

[root@node1 ~]# brctl addif br-vxlan vxlan100

[root@node1 ~]#

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.dacc68b708dc no vxlan100

3. Bring up these two interfaces

[root@node1 ~]# ip link set dev br-vxlan up

[root@node1 ~]# ip link set dev vxlan100 up

4. Create a veth pair

[root@node1 ~]# ip link add veth1.1 type veth peer name veth1.2

[root@node1 ~]# ip l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:58:83:50 brd ff:ff:ff:ff:ff:ff

3: br-vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether da:cc:68:b7:08:dc brd ff:ff:ff:ff:ff:ff

4: vxlan100: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br-vxlan state UNKNOWN mode DEFAULT group default qlen 1000

link/ether da:cc:68:b7:08:dc brd ff:ff:ff:ff:ff:ff

5: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 1a:9f:4b:f4:3d:4d brd ff:ff:ff:ff:ff:ff

6: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 4e:1d:1d:fa:55:aa brd ff:ff:ff:ff:ff:ff

5. Add one end to the br-vxlan bridge and configure an IP address on the other

[root@node1 ~]# brctl addif br-vxlan veth1.1

[root@node1 ~]# ip addr add 10.1.2.2/24 dev veth1.2

[root@node1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:58:83:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.149/24 brd 192.168.5.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::2942:9c0f:ad64:d61d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 4e:1d:1d:fa:55:aa brd ff:ff:ff:ff:ff:ff

inet6 fe80::d8cc:68ff:feb7:8dc/64 scope link

valid_lft forever preferred_lft forever

4: vxlan100: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br-vxlan state UNKNOWN group default qlen 1000

link/ether da:cc:68:b7:08:dc brd ff:ff:ff:ff:ff:ff

inet6 fe80::d8cc:68ff:feb7:8dc/64 scope link

valid_lft forever preferred_lft forever

5: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 1a:9f:4b:f4:3d:4d brd ff:ff:ff:ff:ff:ff

inet 10.1.2.2/24 scope global veth1.2

valid_lft forever preferred_lft forever

6: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop master br-vxlan state DOWN group default qlen 1000

link/ether 4e:1d:1d:fa:55:aa brd ff:ff:ff:ff:ff:ff

[root@node1 ~]# ip link set dev veth1.1 up

[root@node1 ~]# ip link set dev veth1.2 up

node2 192.168.5.148

The commands are the same as on node1:
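Because the two nodes differ only in the remote underlay address and the overlay IP, the whole per-node sequence can be sketched as one function. This is an outline under assumptions: the underlay NIC is ens33 on both nodes as in the transcript, `ip link add ... type bridge` and `ip link set ... master` stand in for `brctl addbr` and `brctl addif`, and the `run` helper and `APPLY` variable are hypothetical (commands are only printed unless `APPLY=1`, which requires root).

```shell
#!/bin/sh
# Print each command; execute it only when APPLY=1 (hypothetical switch, needs root).
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = 1 ]; then "$@"; fi
}

# usage: setup_vxlan_node <remote-underlay-ip> <overlay-cidr>
setup_vxlan_node() {
    remote=$1
    addr=$2
    run ip link add br-vxlan type bridge
    # Point-to-point VXLAN: VNI 100, UDP port 4789, sent out through ens33.
    run ip link add vxlan100 type vxlan id 100 remote "$remote" dstport 4789 dev ens33
    run ip link set vxlan100 master br-vxlan
    run ip link set br-vxlan up
    run ip link set vxlan100 up
    # veth1.1 sits on the bridge, veth1.2 carries the overlay address.
    run ip link add veth1.1 type veth peer name veth1.2
    run ip link set veth1.1 master br-vxlan
    run ip addr add "$addr" dev veth1.2
    run ip link set veth1.1 up
    run ip link set veth1.2 up
}

# node1 (192.168.5.149) points at node2 and takes 10.1.2.2/24.
setup_vxlan_node 192.168.5.148 10.1.2.2/24
```

On node2 the call would be `setup_vxlan_node 192.168.5.149 10.1.2.1/24`.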

[root@node2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:69:fe:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.148/24 brd 192.168.5.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::935f:62cb:a737:87fb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@node2 ~]# ping 192.168.5.149 -c 1

PING 192.168.5.149 (192.168.5.149) 56(84) bytes of data.

64 bytes from 192.168.5.149: icmp_seq=1 ttl=64 time=0.690 ms

--- 192.168.5.149 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.690/0.690/0.690/0.000 ms

[root@node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

[root@node2 ~]# brctl addbr br-vxlan

[root@node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.000000000000 no

Create the VXLAN interface, add it to br-vxlan, and bring both up

[root@node2 ~]# ip link add vxlan100 type vxlan id 100 remote 192.168.5.149 dstport 4789 dev ens33

[root@node2 ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:69:fe:02 brd ff:ff:ff:ff:ff:ff

3: br-vxlan: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 36:d8:c8:a6:8b:c4 brd ff:ff:ff:ff:ff:ff

4: vxlan100: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 56:6c:72:41:ae:bc brd ff:ff:ff:ff:ff:ff

[root@node2 ~]# ip -d link show vxlan100

4: vxlan100: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 56:6c:72:41:ae:bc brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 100 remote 192.168.5.149 dev ens33 srcport 0 0 dstport 4789 ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535
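The `mtu 1450` reported for vxlan100 follows from the encapsulation overhead. Assuming an IPv4 underlay with an MTU of 1500 (as ens33 shows), each inner Ethernet frame is wrapped in a VXLAN header, a UDP header, and an outer IPv4 header, so the arithmetic works out as below. The variable names are only illustrative.

```shell
#!/bin/sh
underlay_mtu=1500   # MTU of ens33 in the transcript
outer_ipv4=20       # outer IPv4 header (no options)
outer_udp=8         # outer UDP header
vxlan_hdr=8         # VXLAN header carrying the VNI
inner_eth=14        # Ethernet header of the encapsulated frame

overhead=$((outer_ipv4 + outer_udp + vxlan_hdr + inner_eth))
echo "overhead: $overhead bytes"                        # overhead: 50 bytes
echo "vxlan100 mtu: $((underlay_mtu - overhead))"       # vxlan100 mtu: 1450
```

An IPv6 underlay would have a larger outer header, so the resulting figure would be smaller there.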

[root@node2 ~]# brctl addif br-vxlan vxlan100

[root@node2 ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:69:fe:02 brd ff:ff:ff:ff:ff:ff

3: br-vxlan: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 56:6c:72:41:ae:bc brd ff:ff:ff:ff:ff:ff

4: vxlan100: <BROADCAST,MULTICAST> mtu 1450 qdisc noop master br-vxlan state DOWN mode DEFAULT group default qlen 1000

link/ether 56:6c:72:41:ae:bc brd ff:ff:ff:ff:ff:ff

[root@node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.566c7241aebc no vxlan100

[root@node2 ~]# ip link set dev br-vxlan up

[root@node2 ~]# ip link set dev vxlan100 up

Create a veth pair, add one end to the br-vxlan bridge, and configure an IP address on the other

[root@node2 ~]# ip link add veth1.1 type veth peer name veth1.2

[root@node2 ~]# brctl addif br-vxlan veth1.1

[root@node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.2e235d431025 no veth1.1

vxlan100

Bring up the interfaces

[root@node2 ~]# ip addr add 10.1.2.1/24 dev veth1.2

[root@node2 ~]# ip link set dev veth1.1 up

[root@node2 ~]# ip link set dev veth1.2 up

Test:

[root@node1 ~]# ping 10.1.2.1

PING 10.1.2.1 (10.1.2.1) 56(84) bytes of data.

64 bytes from 10.1.2.1: icmp_seq=1 ttl=64 time=0.639 ms

64 bytes from 10.1.2.1: icmp_seq=2 ttl=64 time=0.470 ms

^C

--- 10.1.2.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

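rtt min/avg/max/mdev = 0.470/0.554/0.639/0.087 ms

netns combined with VXLAN: communication between netns on two different hosts:

Building on the basic environment above, create a new netns and a veth pair on each of the two hosts.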

node1

[root@node1 ~]# ip netns add ns-vxlan

[root@node1 ~]# ip link add nsvxlan type veth peer name vxlanns

[root@node1 ~]# ip link set dev nsvxlan netns ns-vxlan

[root@node1 ~]# ip netns exec ns-vxlan ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

8: nsvxlan@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether d6:93:0b:ba:8a:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@node1 ~]# ip netns exec ns-vxlan ip link set dev nsvxlan up

[root@node1 ~]# ip netns exec ns-vxlan ip add add 172.16.2.3/24 dev nsvxlan

[root@node1 ~]# ip link set dev vxlanns master br-vxlan

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.4e1d1dfa55aa no veth1.1

vxlan100

vxlanns

[root@node1 ~]# ip netns exec ns-vxlan ip link set dev nsvxlan up

[root@node1 ~]# ip link set dev vxlanns up

[root@node1 ~]# ip netns exec ns-vxlan ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

8: nsvxlan@if7: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether d6:93:0b:ba:8a:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.2.3/24 scope global nsvxlan

valid_lft forever preferred_lft forever

Network environment on node1 itself:

[root@node1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:58:83:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.149/24 brd 192.168.5.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::2942:9c0f:ad64:d61d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 4e:1d:1d:fa:55:aa brd ff:ff:ff:ff:ff:ff

inet6 fe80::d8cc:68ff:feb7:8dc/64 scope link

valid_lft forever preferred_lft forever

4: vxlan100: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br-vxlan state UNKNOWN group default qlen 1000

link/ether da:cc:68:b7:08:dc brd ff:ff:ff:ff:ff:ff

inet6 fe80::d8cc:68ff:feb7:8dc/64 scope link

valid_lft forever preferred_lft forever

5: veth1.2@veth1.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 1a:9f:4b:f4:3d:4d brd ff:ff:ff:ff:ff:ff

inet 10.1.2.2/24 scope global veth1.2

valid_lft forever preferred_lft forever

inet6 fe80::189f:4bff:fef4:3d4d/64 scope link

valid_lft forever preferred_lft forever

6: veth1.1@veth1.2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-vxlan state UP group default qlen 1000

link/ether 4e:1d:1d:fa:55:aa brd ff:ff:ff:ff:ff:ff

inet6 fe80::4c1d:1dff:fefa:55aa/64 scope link

valid_lft forever preferred_lft forever

7: vxlanns@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-vxlan state UP group default qlen 1000

link/ether 8a:16:86:40:d7:a8 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::8816:86ff:fe40:d7a8/64 scope link tentative

valid_lft forever preferred_lft forever

node2

[root@node2 ~]# ip netns add ns-vxlan

[root@node2 ~]# ip link add nsvxlan type veth peer name vxlanns

[root@node2 ~]# ip link set dev nsvxlan netns ns-vxlan

[root@node2 ~]# ip link set dev vxlanns master br-vxlan

[root@node2 ~]# ip netns exec ns-vxlan ip add add 172.16.2.2/24 dev nsvxlan

[root@node2 ~]# ip link set dev vxlanns master br-vxlan

[root@node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-vxlan 8000.26761b6c4843 no veth1.1

vxlan100

vxlanns

[root@node2 ~]# ip link set dev vxlanns up

[root@node2 ~]# ip netns exec ns-vxlan ip link set dev nsvxlan up

[root@node2 ~]# ip netns exec ns-vxlan ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

8: nsvxlan@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 0a:85:2f:4e:e1:d2 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.2.2/24 scope global nsvxlan

valid_lft forever preferred_lft forever

inet6 fe80::885:2fff:fe4e:e1d2/64 scope link

valid_lft forever preferred_lft forever

Network environment on node2 itself:

[root@node2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:69:fe:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.148/24 brd 192.168.5.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::935f:62cb:a737:87fb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 26:76:1b:6c:48:43 brd ff:ff:ff:ff:ff:ff

inet6 fe80::546c:72ff:fe41:aebc/64 scope link

valid_lft forever preferred_lft forever

4: vxlan100: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br-vxlan state UNKNOWN group default qlen 1000

link/ether 56:6c:72:41:ae:bc brd ff:ff:ff:ff:ff:ff

inet6 fe80::546c:72ff:fe41:aebc/64 scope link

valid_lft forever preferred_lft forever

5: veth1.2@veth1.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 62:85:58:1d:16:de brd ff:ff:ff:ff:ff:ff

inet 10.1.2.1/24 scope global veth1.2

valid_lft forever preferred_lft forever

inet6 fe80::6085:58ff:fe1d:16de/64 scope link

valid_lft forever preferred_lft forever

6: veth1.1@veth1.2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-vxlan state UP group default qlen 1000

link/ether 2e:23:5d:43:10:25 brd ff:ff:ff:ff:ff:ff

inet6 fe80::2c23:5dff:fe43:1025/64 scope link

valid_lft forever preferred_lft forever

7: vxlanns@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-vxlan state UP group default qlen 1000

link/ether 26:76:1b:6c:48:43 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::2476:1bff:fe6c:4843/64 scope link

valid_lft forever preferred_lft forever

Test:

The ns-vxlan netns on node1 can ping the netns on node2, but it cannot ping veth1.2 (10.1.2.1).

[root@node1 ~]# ip netns exec ns-vxlan ping 172.16.2.2 -c 2

PING 172.16.2.2 (172.16.2.2) 56(84) bytes of data.

64 bytes from 172.16.2.2: icmp_seq=1 ttl=64 time=0.477 ms

64 bytes from 172.16.2.2: icmp_seq=2 ttl=64 time=0.612 ms

--- 172.16.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1028ms

rtt min/avg/max/mdev = 0.477/0.544/0.612/0.071 ms

[root@node1 ~]# ip netns exec ns-vxlan ping 10.1.2.1 -c 2

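connect: Network is unreachable

The cause is simply a missing route.

Add two static routes.

Adding routes on node1

1. Traffic to 10.1.2.0/24 leaves through the nsvxlan interface (inside ns-vxlan)

2. Traffic to 172.16.2.0/24 leaves through the veth1.2 interface (on the host)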
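To see why these routes are needed: before they are added, the routing table inside ns-vxlan holds only the connected route 172.16.2.0/24, so a destination such as 10.1.2.1 matches no prefix and the kernel reports that the network is unreachable. The sketch below mimics that prefix match in plain shell. It is an illustration of the lookup, not how the kernel implements it; `ip_to_int` and `in_subnet` are hypothetical helper names, and only IPv4 is handled.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# usage: in_subnet <address> <network/prefixlen>; succeeds if the address matches.
in_subnet() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # 4294967295 is 0xFFFFFFFF; shifting left clears the host bits.
    mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}

# The only route in ns-vxlan before the fix is the connected 172.16.2.0/24.
for dst in 172.16.2.2 10.1.2.1; do
    if in_subnet "$dst" 172.16.2.0/24; then
        echo "$dst: matches 172.16.2.0/24 dev nsvxlan"
    else
        echo "$dst: no matching route"
    fi
done
```

This prints a match for 172.16.2.2 and "no matching route" for 10.1.2.1. Once 10.1.2.0/24 is added on nsvxlan, the second destination matches as well. On a live system, `ip netns exec ns-vxlan ip route get 10.1.2.1` shows the kernel's actual decision.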

[root@node1 ~]# ip netns exec ns-vxlan ip route a 10.1.2.0/24 dev nsvxlan

[root@node1 ~]# ip netns exec ns-vxlan ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

8: nsvxlan@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d6:93:0b:ba:8a:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.2.3/24 scope global nsvxlan

valid_lft forever preferred_lft forever

inet6 fe80::d493:bff:feba:8a02/64 scope link

valid_lft forever preferred_lft forever

[root@node1 ~]# ip route add 172.16.2.0/24 dev veth1.2

[root@node1 ~]# ip route

default via 192.168.5.2 dev ens33 proto static metric 100

10.1.2.0/24 dev veth1.2 proto kernel scope link src 10.1.2.2

172.16.2.0/24 dev veth1.2 scope link

192.168.5.0/24 dev ens33 proto kernel scope link src 192.168.5.149 metric 100

[root@node1 ~]# ip netns exec ns-vxlan ip route

10.1.2.0/24 dev nsvxlan scope link

172.16.2.0/24 dev nsvxlan proto kernel scope link src 172.16.2.3

Adding routes on node2

[root@node2 ~]# ip netns exec ns-vxlan ip route a 10.1.2.0/24 dev nsvxlan

[root@node2 ~]# ip netns exec ns-vxlan ip r

10.1.2.0/24 dev nsvxlan scope link

172.16.2.0/24 dev nsvxlan proto kernel scope link src 172.16.2.2

[root@node2 ~]# ip route add 172.16.2.0/24 dev veth1.2

[root@node2 ~]# ip route

default via 192.168.5.2 dev ens33 proto static metric 100

10.1.2.0/24 dev veth1.2 proto kernel scope link src 10.1.2.1

172.16.2.0/24 dev veth1.2 scope link

192.168.5.0/24 dev ens33 proto kernel scope link src 192.168.5.148 metric 100

Test again:

[root@node1 ~]# ip netns exec ns-vxlan ping 172.16.2.2

PING 172.16.2.2 (172.16.2.2) 56(84) bytes of data.

64 bytes from 172.16.2.2: icmp_seq=1 ttl=64 time=0.475 ms

64 bytes from 172.16.2.2: icmp_seq=2 ttl=64 time=0.553 ms

^C

--- 172.16.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1064ms

rtt min/avg/max/mdev = 0.475/0.514/0.553/0.039 ms

[root@node1 ~]# ip netns exec ns-vxlan ping 10.1.2.2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

64 bytes from 10.1.2.2: icmp_seq=1 ttl=64 time=0.157 ms

64 bytes from 10.1.2.2: icmp_seq=2 ttl=64 time=0.054 ms

^C

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1042ms

rtt min/avg/max/mdev = 0.054/0.105/0.157/0.052 ms

[root@node2 ~]# ip netns exec ns-vxlan ping 10.1.2.2 -c 2

PING 10.1.2.2 (10.1.2.2) 56(84) bytes of data.

64 bytes from 10.1.2.2: icmp_seq=1 ttl=64 time=0.665 ms

64 bytes from 10.1.2.2: icmp_seq=2 ttl=64 time=0.566 ms

--- 10.1.2.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1025ms

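rtt min/avg/max/mdev = 0.566/0.615/0.665/0.055 ms

This is not finished yet. Later I will use pipework to study the networking involved, as well as Calico's NetworkPolicy, which is presumably implemented with iptables inside the different network namespaces.

Let's get going! First, install Docker on both hosts and run a busybox container for testing:

No more diagrams! I'll just briefly describe my idea, which is essentially the underlying logic of pipework:

1. Create two containers, one running on each host.

2. Create two veth pairs, connecting each one to a container on one end and to a bridge on the host on the other.

3. Use a bridged network or VXLAN so that the two containers can reach each other while using the same subnet as the hosts.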
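For a single container, the three steps above can be sketched as follows. This is only an outline under stated assumptions, not pipework's actual code: the container's network namespace is assumed to be already visible to `ip netns` under the given name, the bridge is assumed to exist, the interface names and the address 192.168.5.200/24 are placeholders chosen for illustration, and the `run` helper with its `APPLY` switch is hypothetical (commands are only printed unless `APPLY=1`, which requires root).

```shell
#!/bin/sh
# Print each command; execute it only when APPLY=1 (hypothetical switch, needs root).
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = 1 ]; then "$@"; fi
}

# usage: plug_container <netns-name> <bridge> <cidr>
plug_container() {
    ns=$1
    br=$2
    addr=$3
    # Host side "veth-<ns>" goes onto the bridge, "c-<ns>" goes into the container.
    run ip link add "veth-$ns" type veth peer name "c-$ns"
    run ip link set "veth-$ns" master "$br"
    run ip link set "veth-$ns" up
    run ip link set "c-$ns" netns "$ns"
    run ip netns exec "$ns" ip addr add "$addr" dev "c-$ns"
    run ip netns exec "$ns" ip link set "c-$ns" up
}

# Placeholder values: container netns "busybox", bridge br-vxlan, host-subnet address.
plug_container busybox br-vxlan 192.168.5.200/24
```

The same call on the other host, with a different address from the same subnet, would give the second container its end of the link.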

Bridging

[root@node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e237bfce64b8 busybox "sh" 43 hours ago Up 43 hours busybox

[root@node2 ~]# cd /run/netns/

[root@node2 netns]# ls

[root@node2 netns]# ln -sf /var/run/docker/netns/bb8643a1c2a4 busybox

[root@node2 netns]# ls

busybox

[root@node2 netns]# ip netns ls

busybox (id: 0)
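The transcript does not show where the path /var/run/docker/netns/bb8643a1c2a4 came from. One way to find it, assuming the container's `docker inspect` output includes the `NetworkSettings.SandboxKey` field, is to read that field and link it into the directory that `ip netns` scans. The function name `expose_netns` and the `NETNS_DIR` override are hypothetical; the default of /run/netns matches the directory used in the transcript.

```shell
#!/bin/sh
# Directory scanned by `ip netns`; overridable so the function can be tried
# without touching the real one.
NETNS_DIR=${NETNS_DIR:-/run/netns}

# usage: expose_netns <container>
expose_netns() {
    name=$1
    # SandboxKey holds the path of the container's network namespace file.
    key=$(docker inspect -f '{{.NetworkSettings.SandboxKey}}' "$name") || return 1
    [ -n "$key" ] || return 1
    mkdir -p "$NETNS_DIR" || return 1
    # -n replaces an existing link instead of descending into it.
    ln -sfn "$key" "$NETNS_DIR/$name"
}
```

As root, `expose_netns busybox` followed by `ip netns exec busybox ip a` should then behave like the manual `ln -sf` step above.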

[root@node2 netns]# ip netns exec busybox ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@node2 netns]#